Understanding the Performance Gap

CPU vs. DRAM Growth Rates

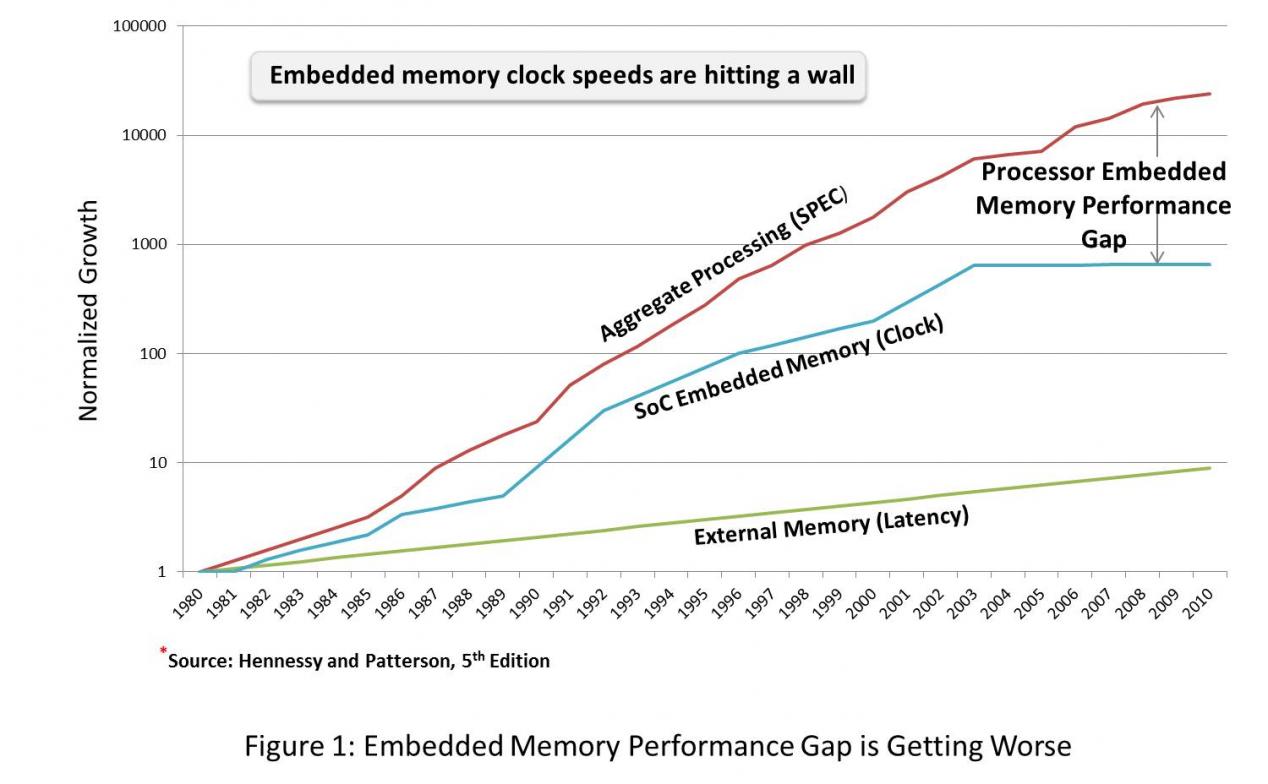

CPU Performance: Advances in processor design, such as higher clock speeds, instruction-level parallelism, and architectural optimizations, have resulted in an approximate 60% performance improvement per year, effectively doubling CPU performance every 1.5 years.

DRAM Performance: Meanwhile, memory technology has lagged behind, with performance increasing at a much slower rate of 9% per year, meaning DRAM speed doubles only every 10 years.

This disparity has resulted in a situation where CPUs execute instructions faster than memory can supply data, leading to significant memory access delays.

Why Main Memory is Slow

- DRAM Design Constraints

DRAM (Dynamic Random Access Memory) is the dominant technology for main memory because of its cost-effectiveness and high density. However, its architecture inherently introduces latency:

Optimized for Density and Cost, Not Speed: DRAM uses a 1T-1C cell structure, which prioritizes storage capacity over access speed.

Long Access Times for Larger Memory Sizes: As memory size increases, access times also increase due to larger address decoders, longer routing paths, and extended wordlines/bitlines.

- Off-Chip Latency and Bandwidth Limitations

Fetching data from main memory introduces additional delays due to:

On-Chip Memory Controllers and I/O Pins: Limited data transfer pins create bottlenecks in bandwidth.

Memory Bus Overhead: The communication between CPU and DRAM over a shared bus results in congestion and increased latency.

The Impact of the Processor-Memory Performance Gap

The widening gap affects various aspects of computing:

Diminishing CPU Efficiency: Faster processors spend more time waiting for data, underutilizing computational resources.

Increased Power Consumption: Frequent memory accesses consume more power, which is critical in mobile and battery-powered devices.

Performance Bottlenecks in AI/ML Workloads: Data-intensive applications such as AI, machine learning, and big data analytics suffer from slower memory access, reducing throughput.

Strategies to Mitigate the Performance Gap

Several architectural and technological innovations aim to reduce the impact of the processor-memory gap:

- Memory Hierarchy and Caching

L1, L2, and L3 Caches: Store frequently accessed data closer to the CPU to minimize latency.

Prefetching: Predicts future data needs and loads them into cache before they are requested.

- Faster Memory Technologies

High-Bandwidth Memory (HBM): Stacks memory dies with a wide data interface, improving bandwidth.

DDR5 and Beyond: Newer memory generations offer higher data transfer rates and lower latencies.

- Processing-in-Memory (PIM) & Near-Memory Computing

Reduces data movement by integrating computation directly within memory.

Helps improve performance for workloads that involve massive data transfers.

- Non-Volatile Memory (NVM) Innovations

Emerging technologies like Resistive RAM (ReRAM) and Phase-Change Memory (PCM) offer the potential for faster access times and persistence.

The need for faster access..?

Locality of Reference

- Temporal Locality

Recently accessed items are likely to be accessed again soon.

Implication: Keeping frequently accessed data closer to the CPU (e.g., in caches) reduces memory latency and improves performance.

- Spatial Locality

Memory addresses that are near one another are likely to be accessed together within a short time frame.

Implication: When fetching a memory block, it is efficient to retrieve nearby data as well, minimizing subsequent memory access delays.

- Sequential Locality (Special Case of Spatial Locality)

The next memory access is often to the next sequential address.

Implication: Fetching larger contiguous memory blocks in advance (e.g., through prefetching) can optimize memory bandwidth usage and reduce latency.